We’re pleased to present the results of our detailed assessment of the XMTP protocol’s reliability and performance. This analysis offers clear insights into how the messaging protocol performs across various SDKs, focusing on key operations and dependability.

Our findings come from the xmtp-qa-testing repository, an open-source tool we’ve built for consistent, repeatable performance testing. It runs tests every 30 minutes on nodes in regions like US East, US West, Europe, and Asia, giving us real-world data on everything from encryption to SDK compatibility.

This report shares both our results and our methods. By keeping our testing tools open, we aim to provide developers with trustworthy benchmarks and full transparency into XMTP’s strengths and areas for improvement. Our goal is straightforward: create a messaging protocol that’s secure, fast, and reliable for all users.

— Fabrizio Guespe, QA Engineer for XMTP

Testing scope

This monorepo contains a comprehensive collection of tools for testing and monitoring the XMTP protocol and its implementations.

Architecture

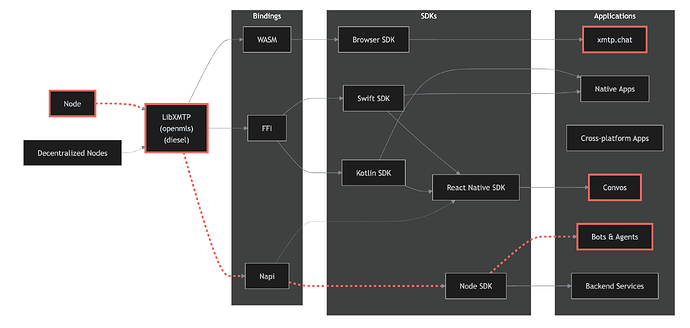

This flowchart illustrates the XMTP protocol’s layered architecture and testing scope. The highlighted path (red dashed line) shows our main testing focus.

LibXMTP is a shared library built in Rust and compiled to WASM, Napi, and FFI bindings. It encapsulates the core cryptography functions of the XMTP messaging protocol. Given the complexity of the protocol, we use openmls as the underlying cryptographic library, so it is important to test how these bindings perform in their own language environments.

We can test all XMTP bindings using three main applications. We use xmtp.chat to test the Browser SDK’s Wasm binding in actual web environments. We use Convos to test the React Native SDK, which uses both Swift and Kotlin FFI bindings for mobile devices. We use agents to test the Node SDK’s Napi binding for server functions. This testing method checks the entire protocol across all binding types, making sure different clients work together, messages are saved, and users have the same experience across the XMTP system.

Testing details

- Multi-region testing nodes (us-east, us-west, asia, europe)

- 30-minute automated test execution intervals

- Comprehensive data aggregation in Datadog

- Testing directly on top of SDKs for real-world scenarios

- dev and production networks covered

- Automated testing for web app xmtp.chat

- Manual testing for React Native app

- Human & agents testing for real-world simulations
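The region, network, and interval settings listed above can be pictured as a small test matrix. The sketch below is illustrative only: the `ScheduledRun` shape and the function names are hypothetical and not taken from the xmtp-qa-testing repository, and it assumes every region runs against both networks.

```typescript
// Regions, networks, and interval as named in the testing details list.
const REGIONS = ["us-east", "us-west", "asia", "europe"] as const;
const NETWORKS = ["dev", "production"] as const;
const INTERVAL_MINUTES = 30;

type Region = (typeof REGIONS)[number];
type Network = (typeof NETWORKS)[number];

// Hypothetical shape for one scheduled run (an assumption, not the repo's type).
interface ScheduledRun {
  region: Region;
  network: Network;
  intervalMinutes: number;
}

// Build one run per region/network pair.
function buildTestMatrix(): ScheduledRun[] {
  const runs: ScheduledRun[] = [];
  for (const region of REGIONS) {
    for (const network of NETWORKS) {
      runs.push({ region, network, intervalMinutes: INTERVAL_MINUTES });
    }
  }
  return runs;
}

// Number of executions one pair completes per day at the given interval.
function runsPerDay(intervalMinutes: number): number {
  return Math.floor((24 * 60) / intervalMinutes);
}

const matrix = buildTestMatrix();
```

Under these assumptions the matrix holds eight region/network pairs, and a 30-minute interval gives each pair 48 executions per day.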

TLDR: Metrics

- Core SDK Performance: Direct message creation (<300ms), group operations (<200-500ms)

- Network Performance: Server call (<100ms), TLS handshake (<100ms), total processing (<300ms)

- Group Scaling: Supports up to 300 members efficiently (create: 9s, operations: <350ms)

- Regional Performance: US/Europe optimal, Asia/South America higher latency (+46-160%)

- Message Reliability: 100% delivery rate (target: 99.9%), perfect ordering

- Environments: Production consistently outperforms Dev network by 5-9%

Operation performance

Core SDK Operations Performance

| Operation | Description | Avg (ms) | Target | Status |
|---|---|---|---|---|
| clientCreate | Creating a client | 254-306 | <350ms | |
| inboxState | Checking inbox state | 300 | <350ms | |
| createDM | Creating a direct message conversation | 200-250 | <350ms | |
| sendGM | Sending a group message | 123-160 | <200ms | |
| receiveGM | Receiving a group message | 90-140 | <200ms | |
| createGroup | Creating a group | 254-306 | <350ms | |
| createGroupByIdentifiers | Creating a group by address | 254-306 | <350ms | |
| syncGroup | Syncing group state | 78-89 | <200ms | |
| updateGroupName | Updating group metadata | 105-160 | <200ms | |
| removeMembers | Removing participants from a group | 110-168 | <250ms | |
| sendGroupMessage | Sending a group message | 100-127 | <200ms | |
| receiveGroupMessage | Processing group message streams | 119-127 | <200ms | |

Note: Based on data from 79 measured operations in the us-east region and production network.
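One way to read the table is to compare the upper end of each measured range against its target. The following is a minimal sketch of that check using a subset of the rows above; the `OperationResult` type and helper names are hypothetical, and treating the high end of the range as the pass/fail figure is an assumption rather than the report's stated rule.

```typescript
// One row of the table: measured average range and target, in milliseconds.
interface OperationResult {
  name: string;
  avgMs: [number, number]; // [low, high]; equal values for a single figure
  targetMs: number;
}

// A subset of the rows from the Core SDK Operations table.
const results: OperationResult[] = [
  { name: "clientCreate", avgMs: [254, 306], targetMs: 350 },
  { name: "inboxState", avgMs: [300, 300], targetMs: 350 },
  { name: "createDM", avgMs: [200, 250], targetMs: 350 },
  { name: "sendGM", avgMs: [123, 160], targetMs: 200 },
  { name: "syncGroup", avgMs: [78, 89], targetMs: 200 },
  { name: "removeMembers", avgMs: [110, 168], targetMs: 250 },
];

// Assumption: an operation passes when its slowest average is under target.
function meetsTarget(r: OperationResult): boolean {
  return r.avgMs[1] < r.targetMs;
}

// Remaining margin between the slowest average and the target.
function headroomMs(r: OperationResult): number {
  return r.targetMs - r.avgMs[1];
}

// Names of operations that miss their target (empty for the rows above).
const failing = results.filter((r) => !meetsTarget(r)).map((r) => r.name);
```

For the rows shown, `failing` is empty, and `clientCreate` has 44 ms of headroom at the slow end of its range.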

Group Operations Performance by Size

| Size | Create (ms) | Send (ms) | Sync (ms) | Update (ms) | Remove (ms) | Target (Create) | Status |
|---|---|---|---|---|---|---|---|
| 50 | 1130 | 71 | 61 | 81 | 140 | <1300ms | |
| 100 | 1278 | 67 | 66 | 91 | 182 | <1400ms | |
| 150 | 1902 | 72 | 85 | 104 | 183 | <2000ms | |
| 200 | 2897 | 73 | 103 | 139 | 211 | <3000ms | |
| 250 | 3255 | 76 | 120 | 164 | 234 | <3500ms | |
| 300 | 5089 | 81 | 321 | 255 | 309 | <5500ms | |
| 350 | 5966 | 89 | 432 | 355 | 409 | <6000ms | |
| 400 | 6542 | 89 | 432 | 355 | 409 | <7000ms | |
| 450 | - | - | - | - | - | - | |

Note: Performance degrades significantly beyond 350 members, with 400 members representing a hard limit on the protocol.
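The 300-member figure quoted in the TLDR can be cross-checked against the table with a small calculation. The sketch below finds the largest measured group size whose send, sync, update, and remove times all stay under a chosen limit; the 350 ms limit comes from the TLDR, while the `GroupSample` type and function name are hypothetical. Create time is deliberately left out, since it has its own per-size target.

```typescript
// One row of the group scaling table (create time omitted), in milliseconds.
interface GroupSample {
  size: number;
  sendMs: number;
  syncMs: number;
  updateMs: number;
  removeMs: number;
}

const samples: GroupSample[] = [
  { size: 50, sendMs: 71, syncMs: 61, updateMs: 81, removeMs: 140 },
  { size: 100, sendMs: 67, syncMs: 66, updateMs: 91, removeMs: 182 },
  { size: 150, sendMs: 72, syncMs: 85, updateMs: 104, removeMs: 183 },
  { size: 200, sendMs: 73, syncMs: 103, updateMs: 139, removeMs: 211 },
  { size: 250, sendMs: 76, syncMs: 120, updateMs: 164, removeMs: 234 },
  { size: 300, sendMs: 81, syncMs: 321, updateMs: 255, removeMs: 309 },
  { size: 350, sendMs: 89, syncMs: 432, updateMs: 355, removeMs: 409 },
  { size: 400, sendMs: 89, syncMs: 432, updateMs: 355, removeMs: 409 },
];

// Largest measured size whose slowest non-create operation is under limitMs.
// Returns undefined when no measured size qualifies.
function largestSizeUnder(rows: GroupSample[], limitMs: number): number | undefined {
  let best: number | undefined;
  for (const row of rows) {
    const worst = Math.max(row.sendMs, row.syncMs, row.updateMs, row.removeMs);
    if (worst < limitMs && (best === undefined || row.size > best)) {
      best = row.size;
    }
  }
  return best;
}

const efficientLimit = largestSizeUnder(samples, 350);
```

With the table's values, `efficientLimit` is 300: at 350 members the sync time (432 ms) is the first figure to exceed the limit.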

Networks performance

Network performance

| Performance Metric | Current Performance | Target | Status |
|---|---|---|---|
| DNS Lookup | 50.3ms avg | <100ms | |
| TCP Connection | 105.6ms avg | <200ms | |
| TLS Handshake | 238.9ms avg | <300ms | |
| Processing | 30ms avg | <100ms | |
| Server Call | 238.9ms avg | <300ms | |

Note: Performance metrics based on us-east testing on production network.

Regional Network Performance

| Region | Server Call (ms) | TLS (ms) | vs us-east | Status |
|---|---|---|---|---|
| us-east | 276.6 | 87.2 | Baseline | |
| us-west | 229.3 | 111.1 | -15.6% | |
| europe | 178.5 | 111.4 | -33.2% | |
| asia | 411.0 | 103.7 | +46.5% | |
| south-america | 754.6 | 573.1 | +160.3% | |

Note: Baseline is us-east region and production network.

Dev vs Production Network Performance Comparison

| Region | Dev (ms) | Production (ms) | Difference | Status |
|---|---|---|---|---|
| us-east | 294.8 | 276.6 | -6.2% | |
| us-west | 247.1 | 229.3 | -7.2% | |
| europe | 196.3 | 178.5 | -9.1% | |
| asia | 439.8 | 411.0 | -6.5% | |
| south-america | 798.2 | 754.6 | -5.5% | |

Note: Production network consistently shows better network performance across all regions, with improvements ranging from 5.5% to 9.1%.
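The Difference column can be reproduced from the two latency columns. The sketch below assumes the difference is the change in Production relative to Dev, rounded to one decimal place; the function and variable names are hypothetical.

```typescript
// Latency per region in milliseconds, copied from the table above.
interface RegionLatency {
  region: string;
  devMs: number;
  productionMs: number;
}

const latencies: RegionLatency[] = [
  { region: "us-east", devMs: 294.8, productionMs: 276.6 },
  { region: "us-west", devMs: 247.1, productionMs: 229.3 },
  { region: "europe", devMs: 196.3, productionMs: 178.5 },
  { region: "asia", devMs: 439.8, productionMs: 411.0 },
  { region: "south-america", devMs: 798.2, productionMs: 754.6 },
];

// Percentage change of Production relative to Dev, rounded to one decimal.
// Negative values mean Production is faster.
function percentDifference(devMs: number, productionMs: number): number {
  const raw = ((productionMs - devMs) / devMs) * 100;
  return Math.round(raw * 10) / 10;
}

const differences = latencies.map((l) => ({
  region: l.region,
  pct: percentDifference(l.devMs, l.productionMs),
}));
```

Under this assumption the computed values match the table: -6.2, -7.2, -9.1, -6.5, and -5.5 percent, in row order.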

Message reliability

Message delivery testing

| Test Area | Current Performance | Target | Status |
|---|---|---|---|
| Stream Delivery Rate | 100% successful | 99.9% minimum | |
| Poll Delivery Rate | 100% successful | 99.9% minimum | |
| Recovery Rate | 100% successful | 99.9% minimum | |
| Stream Order | 100% in order | 99.9% in order | |
| Poll Order | 100% in order | 99.9% in order | |
| Recovery Order | 100% in order | 99.9% in order | |

Note: Tests run regularly in groups of 40 active members, each listening to one user sending 100 messages.
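To make the delivery and order metrics concrete, here is a minimal sketch of how they could be computed for a single receiver. It is not the test suite's actual implementation: the message ID format, the function names, and the definition of "in order" (received messages keep the relative order in which they were sent) are all assumptions.

```typescript
// Percentage of sent message IDs that appear in the received list.
function deliveryRate(sent: string[], received: string[]): number {
  if (sent.length === 0) return 100;
  const seen = new Set(received);
  const delivered = sent.filter((id) => seen.has(id)).length;
  return (delivered * 100) / sent.length;
}

// True when received messages keep the relative order in which they were sent.
// IDs that were never sent are ignored.
function isInOrder(sent: string[], received: string[]): boolean {
  const position = new Map<string, number>();
  sent.forEach((id, index) => position.set(id, index));
  let last = -1;
  for (const id of received) {
    const current = position.get(id);
    if (current === undefined) continue;
    if (current < last) return false;
    last = current;
  }
  return true;
}

// One sender, 100 messages, as in the scenario described in the note.
// The "gm-N" IDs are hypothetical placeholders.
const sent = Array.from({ length: 100 }, (_, i) => `gm-${i + 1}`);
const complete = sent.slice();
const oneMissing = sent.slice(0, 99);
const swapped = ["gm-2", "gm-1"].concat(sent.slice(2));
```

In the 40-member scenario, the same two checks would be applied to each receiver's list and the results aggregated. With the sample data, `complete` gives 100% delivery in order, `oneMissing` gives 99%, and `swapped` fails the order check.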

Success criteria summary

| Metric | Current Performance | Target | Status |
|---|---|---|---|
| Core SDK Operations | All within targets | Meet defined targets | |
| Group Operations | ≤300 members | ≤300 members on target | |
| Network Performance | All metrics within target | Meet defined targets | |
| Message Delivery | 100% | 99.9% minimum | |
| Stream Delivery Rate | 100% | 99.9% minimum | |
| Poll Delivery Rate | 100% | 99.9% minimum | |
| Message Order | 100% | 100% in order | |
| South-america & Asia | more than 40% | <20% difference | |
| US & Europe | less than 20% variance | <20% difference | |
| Dev vs Production | Production 5.5-9.1% better | Production ≥ Dev | |

Disclaimers

- Ideal Network Conditions: Real-world performance may vary significantly when the network is under stress or high load.

- Node SDK only: Metrics are based solely on Node SDK operations and do not cover performance across all SDKs.

Tools & Utilities

- Repository: xmtp-qa-testing, a monorepo containing multiple tools for testing and monitoring

- Test bot: Bot for testing with multiple agents

- Workflows: Our CI/CD pipeline configuration

- Vitest: We use Vitest for running tests with an interactive UI

- Railway: Our Railway project with all our services

- Gm bot: Bot for testing with an older version of the protocol