IMPORTANT: This post contains ideas intended to spark discussion and gather feedback. These are not final concepts and must not be interpreted as implementation details. For official information about how XMTP works, see the XMTP technical documentation. Have questions about this post? We’d love to hear from you—post a reply.

Goal

Describe identity, reputation, and sybil challenges in the network. Propose a simple option for boosting a messaging identity’s reputation through identity verification performed by messaging peers.

Background and relevant context

Identity in XMTP

A social graph exists within XMTP that is made up of messaging nodes, which are connected to other messaging nodes through edges. Each node is represented by an identity. An identity is described by both stated attributes and reputation.

Reputation

An identity’s reputation changes with time. Activities and inactivity, behaviors, and performance determine the identity’s reputation. Critically, reputation depends on others’ perception of the specific activities and inactivity, behaviors, and performance carried out by the identity.

A node’s reputation can be upgraded or degraded by its activities and inactivity, behaviors, and performance, and by how others perceive them.

All newly created, unfunded EVM addresses begin life with a neutral reputation.

Attacker motivation

Attackers do not have unlimited resources. They must choose where to deploy resources based on the expected value and cost of the full set of available opportunities. Attackers choose opportunities with the highest return on investment (ROI).

The influx of new users to XMTP has increased the set of positive ROI opportunities for attackers in the form of phishing attacks.

Post delivery filtering and indexing defense

Honest network participants would like to make phishing attacks a negative ROI opportunity. Available strategies include transaction fee mechanisms and post delivery filtering and indexing algorithms (see Spam defense classification). The focus of this proposal is post delivery filtering and indexing algorithms.

These algorithms are developed and maintained by user inbox app providers. They attempt to promote messages from neutral-to-positive reputation messaging identities, and to demote or filter out messages from negative reputation messaging identities. The possibility that a phishing message never reaches the user’s attention lowers the attacker’s expected value, and can result in a negative ROI.
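To make the ROI argument concrete, here is a minimal sketch with made-up numbers. The function name `attack_roi`, its parameters, and every value below are illustrative assumptions, not measurements from the XMTP network. It only shows how a lower chance of reaching the user's attention can flip a campaign from positive to negative ROI.

```python
def attack_roi(messages_sent, cost_per_message, reach_probability,
               success_probability, payout):
    """Return ROI = (expected revenue - cost) / cost for a phishing campaign.

    reach_probability: chance a message reaches the user's attention
    success_probability: chance a seen message leads to a successful phish
    """
    cost = messages_sent * cost_per_message
    expected_revenue = (messages_sent * reach_probability
                        * success_probability * payout)
    return (expected_revenue - cost) / cost


# Hypothetical campaign: 10,000 messages at 0.01 each, payout of 50 per victim.
# Without filtering, every message is seen.
unfiltered_roi = attack_roi(10_000, 0.01, 1.0, 0.001, 50.0)

# With filtering, assume only 1 in 10 messages is seen.
filtered_roi = attack_roi(10_000, 0.01, 0.1, 0.001, 50.0)

print(f"unfiltered ROI: {unfiltered_roi:.2f}")
print(f"filtered ROI:   {filtered_roi:.2f}")
```

Under these assumed numbers the unfiltered campaign is profitable and the filtered one loses money, which is the outcome the filtering and indexing defense aims for.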

Sybil

A single attacker can control more than one messaging identity in the network. This actor can bypass filtering algorithms by using addresses that have not yet accrued negative reputation.

Motivation

An attacker’s ability to create many new sybil addresses with a neutral reputation increases the potential for positive ROI attacks. This, in turn, leads to more spam in the network.

Proposal

Verifiable identity providers can use the XMTP messaging interface to create identity verification workflows. Honest users can upgrade their reputation by successfully completing the provider’s workflow.

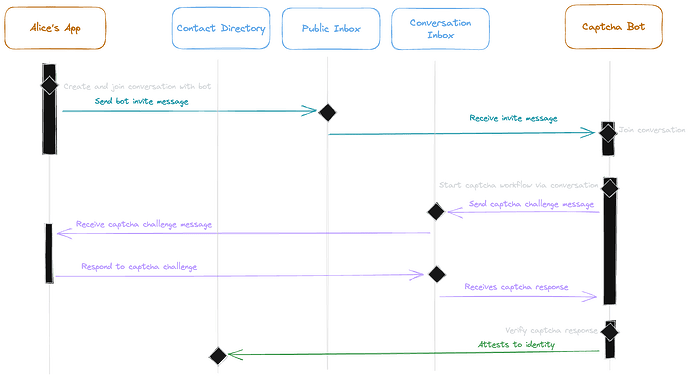

In the above workflow, Alice invites a captcha bot provider to a conversation. The captcha bot joins the conversation with Alice and sends a challenge via conversation message. Alice responds to the challenge. The provider verifies Alice’s response and attests to Alice’s XMTP contact ID. Future message recipients can now independently verify the attestation to Alice’s contact ID. In other words, Alice’s reputation has been upgraded (e.g., from neutral to positive).
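The challenge, response, and attestation steps can be sketched as follows. This is a toy model under stated assumptions, not XMTP SDK code: the `CaptchaProvider` class and its methods are hypothetical, the arithmetic question stands in for a real captcha, and an HMAC tag stands in for the attestation. An HMAC can only be checked by the provider that holds the key, so a real design that lets recipients verify independently would likely need a public-key signature or similar. How attestation is actually represented is one of the open questions in this post.

```python
import hashlib
import hmac
import secrets


class CaptchaProvider:
    """Hypothetical provider bot; not part of any XMTP SDK."""

    def __init__(self):
        self._key = secrets.token_bytes(32)  # stand-in for a signing key
        self._pending = {}                   # contact ID -> expected answer

    def issue_challenge(self, contact_id):
        """Send a challenge (here, a trivial sum) to the contact."""
        a, b = secrets.randbelow(10), secrets.randbelow(10)
        self._pending[contact_id] = str(a + b)
        return f"What is {a} + {b}?"

    def verify_response(self, contact_id, answer):
        """Return an attestation if the answer is correct, else None.

        Each challenge can be answered only once.
        """
        expected = self._pending.pop(contact_id, None)
        if expected is None:
            return None
        if not hmac.compare_digest(expected.encode(), answer.strip().encode()):
            return None
        return self._attest(contact_id)

    def _attest(self, contact_id):
        # Assumption: HMAC tag as a placeholder for a real attestation format.
        return hmac.new(self._key, contact_id.encode(), hashlib.sha256).hexdigest()

    def check_attestation(self, contact_id, attestation):
        """Check that an attestation was issued for this contact ID."""
        return hmac.compare_digest(
            self._attest(contact_id).encode(), attestation.encode()
        )


# Alice's side of the conversation.
provider = CaptchaProvider()
question = provider.issue_challenge("alice")
a, b = [int(token) for token in question.rstrip("?").split() if token.isdigit()]
attestation = provider.verify_response("alice", str(a + b))

print(provider.check_attestation("alice", attestation))
print(provider.check_attestation("mallory", attestation))
```

The attestation is bound to the contact ID, so in this sketch it cannot be reused by a different identity.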

Provider workflows may range from simple captcha challenges to requiring sensitive information for identity verification.

Spam mitigation

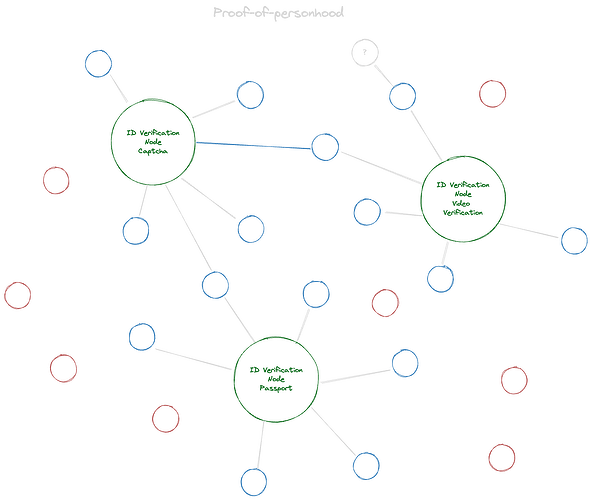

A user who has received attestation from a provider has effectively created a connection with the provider. A graph of ecosystem messaging identities would look like the diagram above. The blue dots have one or more connections with known identity providers. Inbox apps may consider blue dots to be positive reputation identities, and may promote their invite messages in recipient inboxes. Red dots have yet to connect with a provider. Inbox apps may treat red dots similarly to negative reputation identities, and demote their invite messages in recipient inboxes.
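An inbox app could apply this blue/red distinction roughly as follows. This is a minimal sketch: the function names, the `(identity, provider)` edge format, and the invite dictionaries are assumptions made for illustration, and a real inbox app would likely combine this signal with others.

```python
def attested_identities(attestations, trusted_providers):
    """Return identities with at least one edge to a trusted provider (blue dots)."""
    return {
        identity
        for identity, provider in attestations
        if provider in trusted_providers
    }


def rank_invites(invites, attested):
    """Promote invites from attested senders; demote the rest.

    sorted() is stable, so invites keep their original order within each group.
    """
    return sorted(invites, key=lambda invite: invite["sender"] not in attested)


# Hypothetical graph edges: (identity, provider that attested to it).
attestations = [("alice", "captcha-bot"), ("bob", "unknown-bot")]
trusted_providers = {"captcha-bot"}

invites = [
    {"sender": "bob", "text": "Claim your airdrop"},
    {"sender": "alice", "text": "Hi, it's Alice"},
    {"sender": "carol", "text": "Hello"},
]

blue = attested_identities(attestations, trusted_providers)
ranked = rank_invites(invites, blue)
print([invite["sender"] for invite in ranked])
```

Note that bob's attestation from a provider the inbox app does not trust counts for nothing here, which is why distinguishing reputable providers from fraudulent ones matters.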

XMTP inbox app providers can require proof-of-personhood for new users by simply requiring that a user’s first XMTP contact is an identity provider. This is similar to Discord servers requiring verification to join. This reduces bot activity, because an attacker running their own client would be easily distinguishable from users who have joined via inbox app provider workflows that require identity provider verification.

In future versions of the protocol, a recipient user may be able to specify that senders must present proof-of-personhood in order for invite messages to be delivered to their inbox.

The benefits of a message-based workflow

- Identity providers can accelerate distribution by leveraging the XMTP network user base.

- Users encounter less friction. Message-based proof-of-personhood workflows are more convenient for users than dedicated web interfaces.

- Multiple identity providers can integrate their services into XMTP, thus providing choice for inbox app developers and users.

Questions

- How is attestation represented?

- Where do recipient inbox apps retrieve attestation state? Is the state included with the message, or must it be looked up somewhere?

- Should attestation state be made public or kept private?

- If it should remain private, how is the information accessible to recipient inbox apps?

- Can identity providers build a message-based workflow that is capable of validating personhood?

- Would this need to be assisted by the protocol in some way?

- How will inbox apps and users safely distinguish between reputable and fraudulent providers?

- What risks does this present to users?